AI-Assisted Risk Search

As part of a broader initiative to explore how AI could enhance regulatory workflows, our team set out to solve one of the most time-consuming and error-prone parts of the medical device submission process: determining the correct product code and identifying potential hazards and harms for FDA submissions.

These tasks, essential for compliance and risk assessment, often required teams to spend weeks manually combing through FDA and International Medical Device Regulators Forum (IMDRF) databases or pay external consultants thousands of dollars. We saw an opportunity to reduce this burden by surfacing this information directly in our platform, where users already managed their device documentation.

My role: Design Lead, Discovery Support

Team: Design + Product Manager + Data Science Team

The Problem

When submitting a new medical device for FDA approval, companies must assign their product a product code and complete a risk matrix outlining potential hazards, harms, and probabilities of adverse events.

Today, this process is slow, manual, and error-prone.

- To find a product code, users often spend days or weeks searching through the FDA’s MAUDE database or hire costly consultants.

- To build a risk matrix, they manually review years of reports from the IMDRF database and brainstorm events from scratch - a process that’s time-consuming and risks overlooking key information.

The Opportunity: Incorporating AI Into the Product

We started with two hypotheses that we wanted to dig into and test:

- Customers would rather search for product codes and hazards within the system where they already manage device documentation.

- Given a device description, users would find value in receiving a starting list of potential hazards and harms.

To test these hypotheses, we partnered with our data science team to train an AI model on the Manufacturer and User Facility Device Experience (MAUDE) and IMDRF databases. The goal was to give users relevant product code and risk information in seconds or minutes rather than weeks.

Discovery

I led early design discovery efforts to clarify the problem space and guide prioritization for a proof of concept.

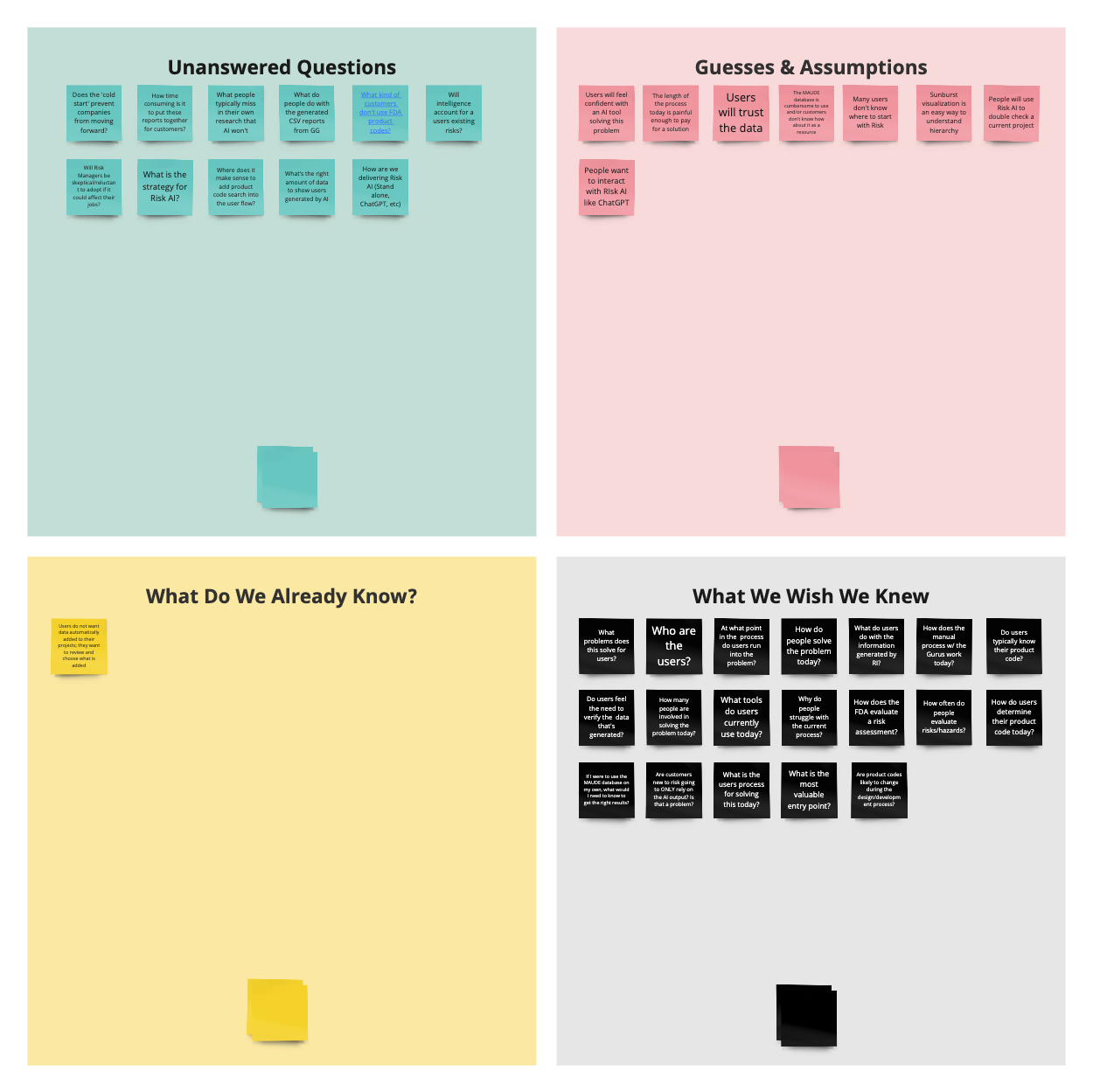

We began with a question matrix, documenting what we knew, what we assumed, and what we needed to validate. We evaluated each knowledge gap’s risk to the project and prioritized areas needing research before moving forward.

Sticky notes describing our unanswered questions, guesses and assumptions, what we already knew, and what we wished we knew.

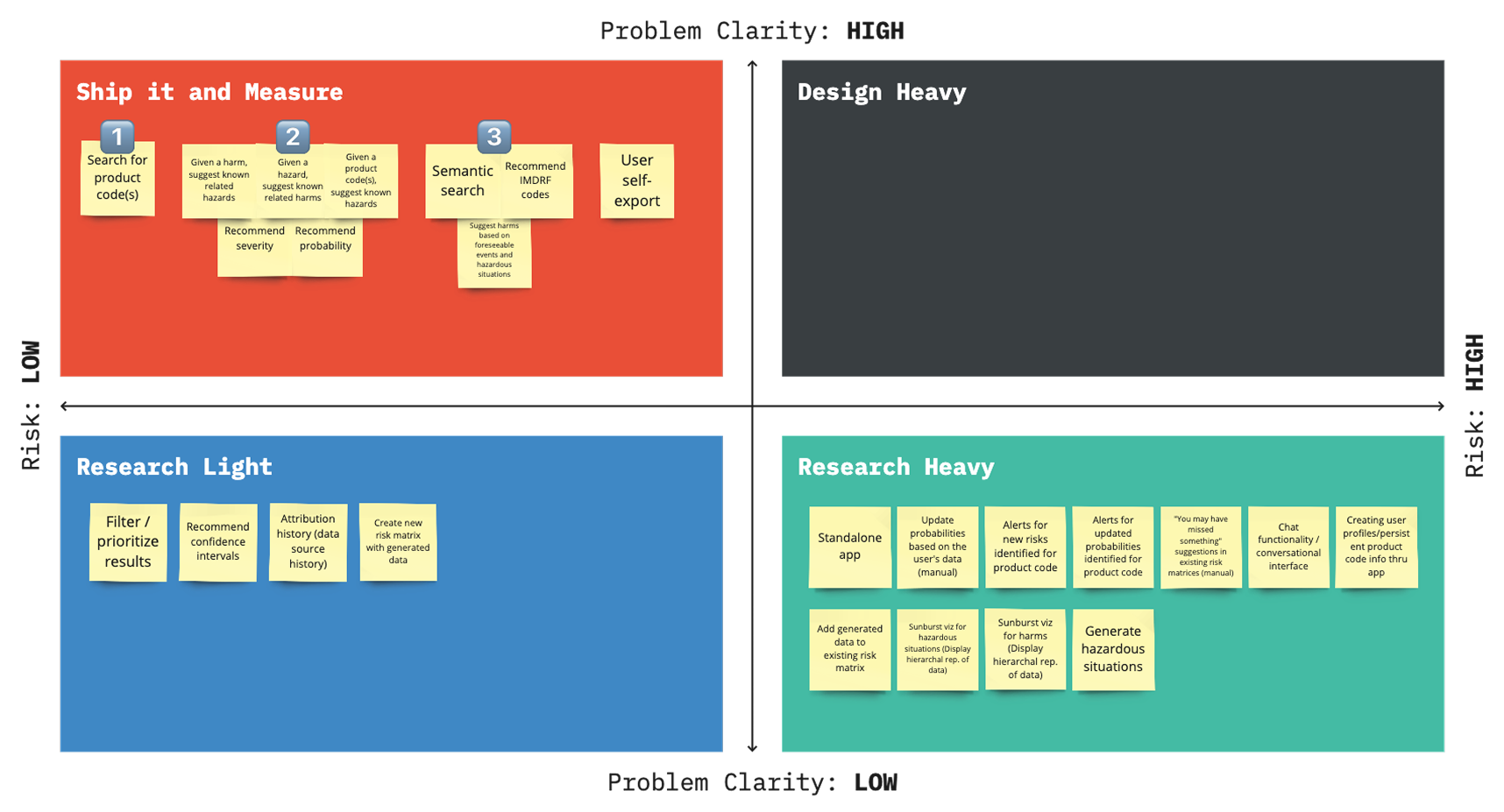

Next, we ran a problem clarity vs. risk assessment session to map potential outcomes for users and prioritize the ideas that were both feasible and impactful. This helped us identify the top three “Ship it and Measure” opportunities: searching for a product code; recommending hazards, harms, severity, and probability; and semantic search. These were lightweight experiments that would quickly validate our assumptions.

Sticky notes describing what features we determined could be categorized as Ship and Measure, Design Heavy, Research Light, and Research Heavy.

Proof of Concept & Feedback

To move fast, the data science team built a proof-of-concept external search tool that provided hazard and harm recommendations to demonstrate viability. When we shared this with customers, their feedback was clear:

“This is helpful, but I’d still need to manually transfer everything into the app - it doesn’t actually save me much time.”

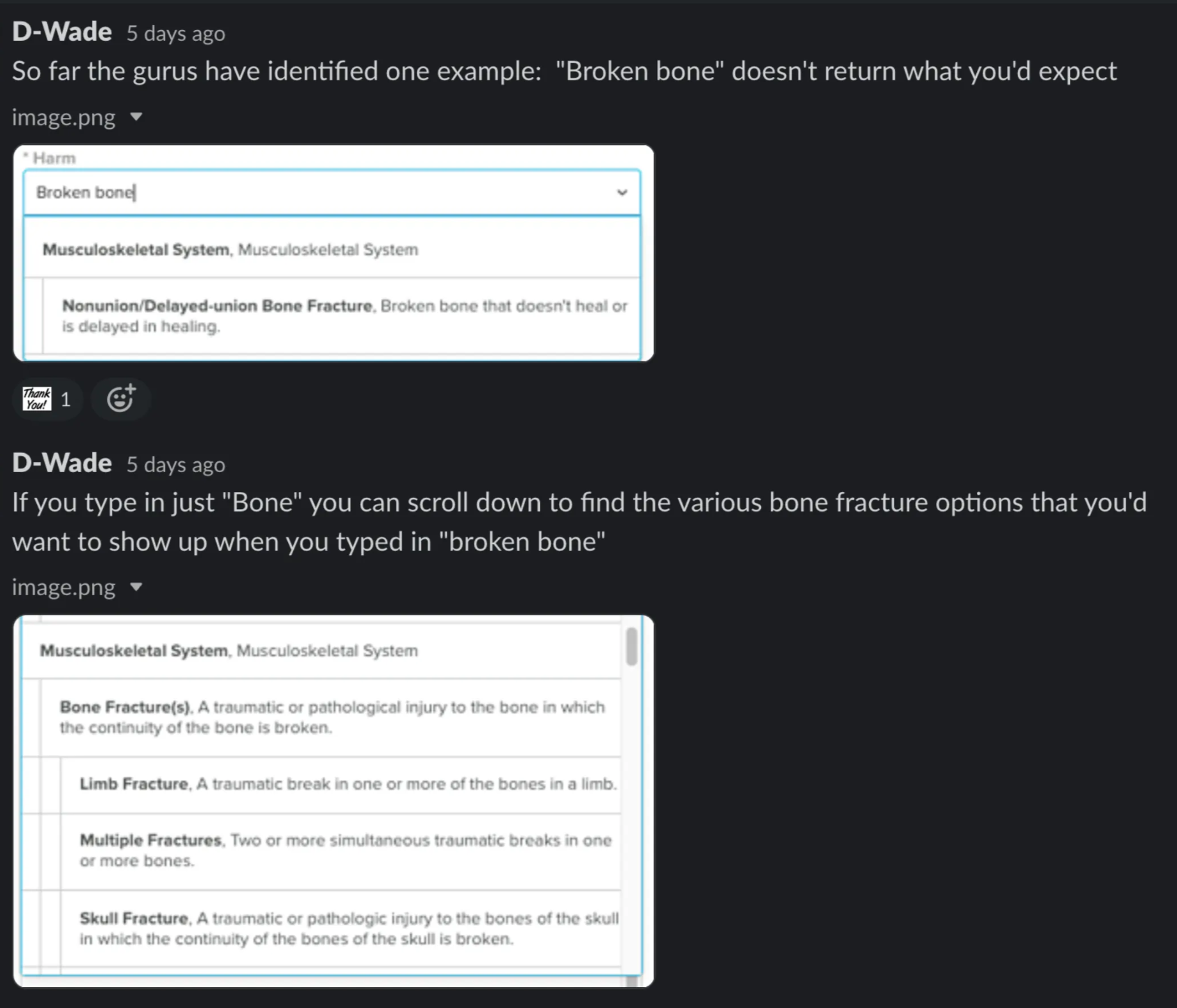

We also learned that while users could already search a pre-populated list of hazards and harms within the app, it required exact terminology. Many couldn’t find the terms they were looking for, or weren’t sure what to search for in the first place.

A Slack conversation with one of our medical device "gurus" sharing how non-exact search terms could be critical when trying to find the right harm.

This insight led us to phase one of our in-app AI integration: a natural language search feature that helped users find hazards and harms using plain English - a first step toward auto-generating risk profiles from device descriptions.
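To make the gap between the two search styles concrete, here is a minimal Python sketch. It is not the data science team's model: the hazard terms are made up, and the hand-written `CONCEPTS` map is a toy stand-in for whatever learned representation a real semantic search would use. It only illustrates why an exact-terminology search can return nothing for a query that a meaning-based search can still match.

```python
import math
from collections import Counter

# Hypothetical hazard terms for illustration; not taken from the IMDRF database.
TERMS = [
    "Electrical shock",
    "Environmental conditions",
    "Software malfunction",
]

# Toy stand-in for a learned model: words that share a meaning map to one concept.
CONCEPTS = {
    "climate": "environment",
    "environmental": "environment",
    "weather": "environment",
}


def exact_search(query, terms):
    """Return terms that contain the query text verbatim (case-insensitive)."""
    q = query.lower()
    return [t for t in terms if q in t.lower()]


def concept_vector(text):
    """Count concepts in the text, mapping known words to their shared concept."""
    return Counter(CONCEPTS.get(word, word) for word in text.lower().split())


def cosine(a, b):
    """Cosine similarity between two concept-count vectors."""
    dot = sum(a[key] * b[key] for key in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def smart_search(query, terms, threshold=0.3):
    """Return terms whose concept vectors are similar enough to the query."""
    query_vec = concept_vector(query)
    scored = [(cosine(query_vec, concept_vector(t)), t) for t in terms]
    return [t for score, t in sorted(scored, reverse=True) if score >= threshold]


basic_results = exact_search("climate", TERMS)
smart_results = smart_search("climate", TERMS)
```

In this sketch, `basic_results` is empty because no term contains the literal word "climate", while `smart_results` includes "Environmental conditions" because both map to the same concept. The `threshold` value is an arbitrary assumption chosen for the example.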

Design

Our challenge was to introduce AI-assisted search in a way that felt familiar and low-risk to users.

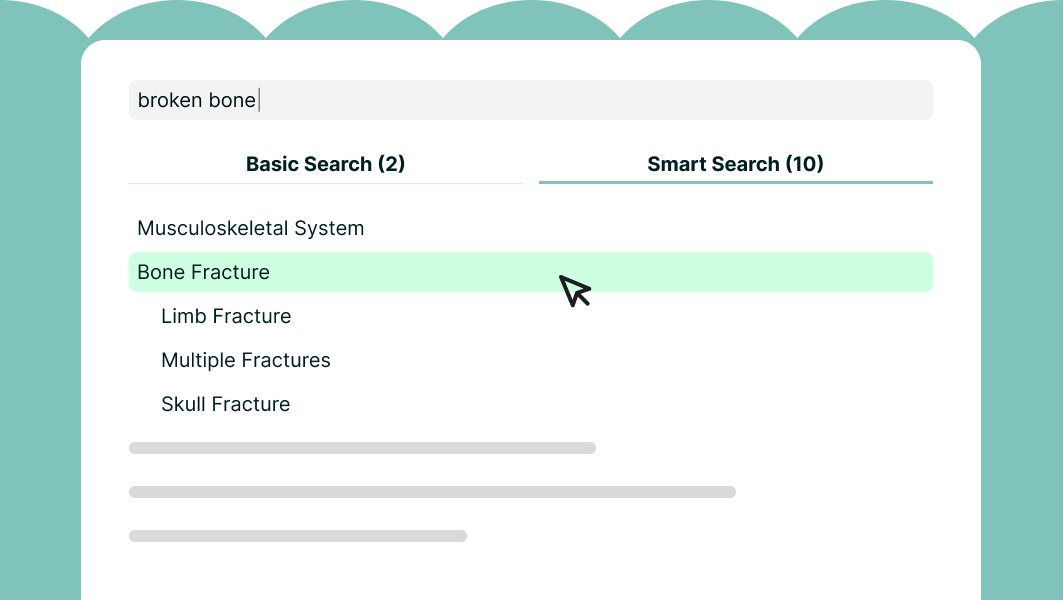

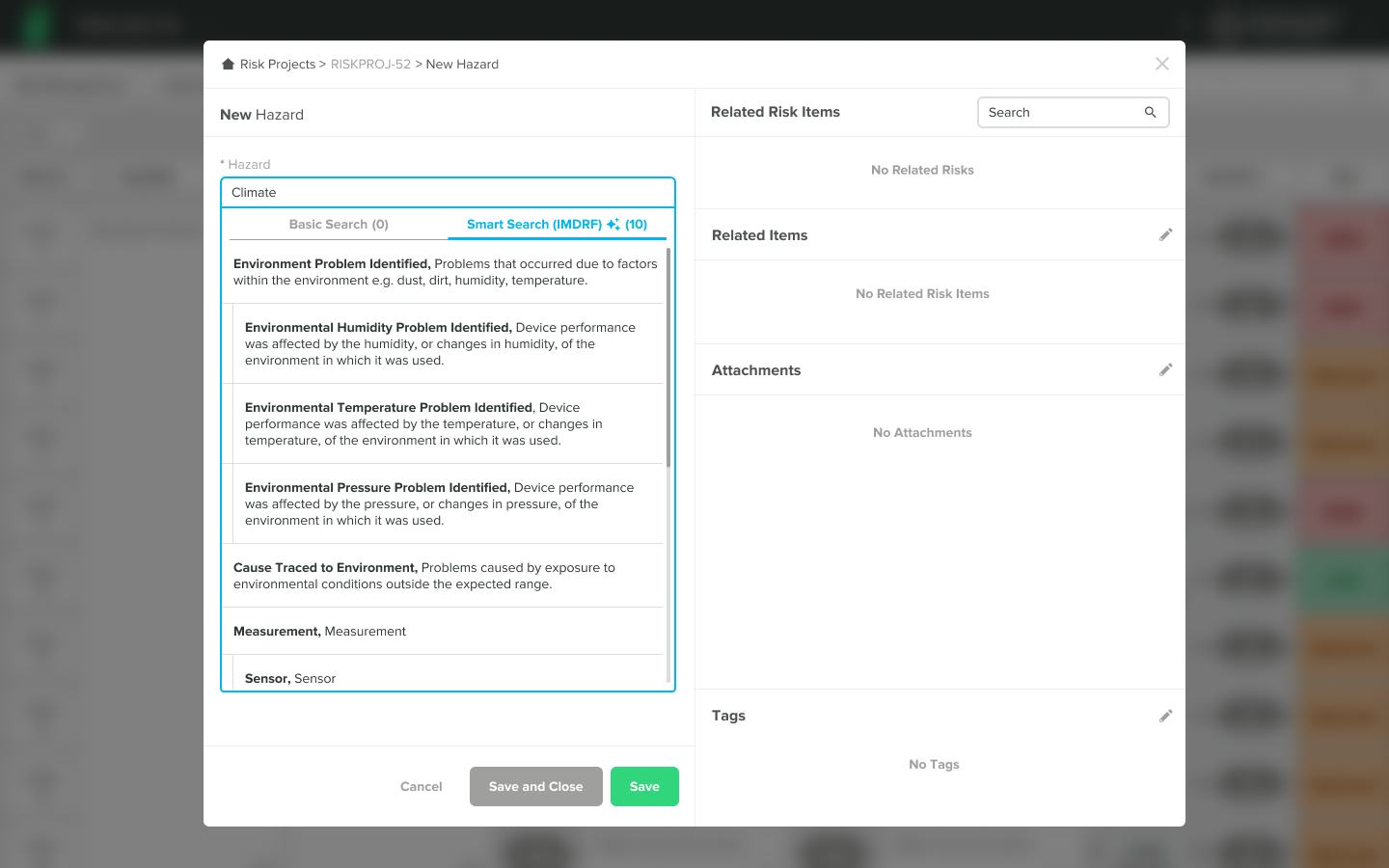

At this stage, the model didn’t have access to custom hazard and harm entries, so I designed a tabbed search interface that displayed results from:

- Existing custom hazards (created by the user)

- Semantic search results (suggested by the AI model)

This approach allowed us to test the AI-assisted search alongside the existing experience without disrupting current workflows.

Our team decided on "Smart Search (IMDRF)" as the title for the tab to communicate that it was AI-assisted and would give more robust results.

Showing the initial dropdown for the search with a tab for Basic Search and a tab for the AI Smart Search (IMDRF), with examples of how people can get started with searching.

Showing an example of search results in the Smart Search (IMDRF) tab for the word "Climate", which did not return any results in the Basic Search tab.

Results

Within a few months of launch, analytics showed that users selected semantic search results 40% of the time - a strong indicator that the model was surfacing meaningful, relevant results.

Unfortunately, when the internal data science team was later disbanded, we had to sunset the feature since the model could no longer be supported.

However, the experiment provided valuable insights into how AI could be safely and effectively introduced into regulated workflows.

What We Learned

This project was our organization’s first real AI experiment within the app. It validated that users were open to AI assistance - when it was delivered transparently and in a way that complemented their existing processes.

Key takeaways:

- Early customer feedback could have saved development time. However, the business prioritized proof-of-concept speed to secure buy-in for future AI initiatives.

- Transparency and control were crucial. Users trusted the AI when they could clearly distinguish AI results from their own data.

- Even small experiments can unlock major learning. Our semantic search test became the foundation for later discussions around AI-driven document authoring and auto-classification features.

Though the feature was eventually sunset, the project demonstrated:

- A viable path to reduce compliance workload using AI

- Tangible interest from customers in AI-assisted decision support

- A framework for designing AI transparency and user control in regulated tools

This initiative paved the way for future exploration of generative AI features in our product suite - and taught our team how to balance innovation speed with user validation.
